Text-to-CAD: Risks and Opportunities

- Reginald Raye

- May 15, 2023

Updated: May 16, 2023

The dust has hardly formed, much less settled, when it comes to AI-powered text-to-image generation. Yet the result is already clear: a tidal wave of crummy images. There is some quality in the mix, to be sure, but not nearly enough to justify the damage done to the signal-to-noise ratio – for every artist who benefits from a Midjourney-generated album cover, there are fifty people duped by a Midjourney-generated deepfake. And in a world where declining signal-to-noise ratios are the root cause of so many ills (think scientific research, journalism, government accountability), this is not good.

It’s now necessary to view all images with suspicion. (This has admittedly long been the case, but the increasing incidence of deepfakes warrants a proportional increase in vigilance, which, apart from being simply unpleasant, is cognitively taxing.) Constant suspicion (or, failing that, frequent misdirection) seems a high price to pay for a digital bauble that no one asked for and that, as yet, offers little in the way of upside. Hopefully (or, perhaps more to the point, prayerfully), the cost-to-benefit ratio will soon enter saner territory.

But in the meantime, we should be aware of a new phenomenon in the generative AI world: AI-powered text-to-CAD generation. The premise is similar to that of text-to-image programs, except that instead of an image, the programs return a 3D CAD model.

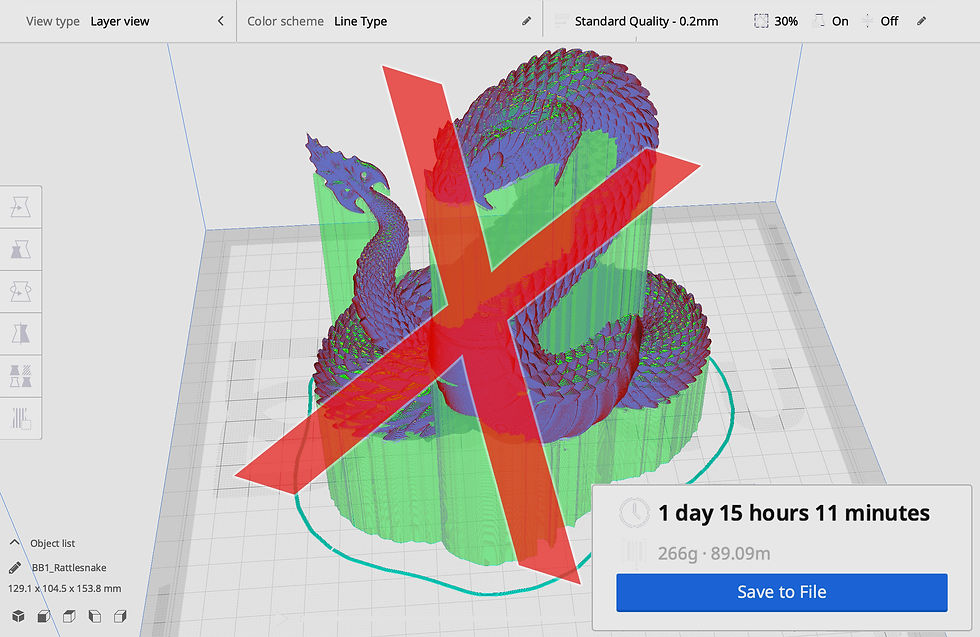

Smelling opportunity, major players have thrown their hats into the ring: Autodesk (CLIP-Forge), Google (DreamFusion), OpenAI (Point-E), and NVIDIA (Magic3D) have all recently created text-to-CAD tools, the output of which is shown below:

The presence of major players has not deterred upstarts, which, as of early 2023, were popping up at a rate of nearly one a month. Among them, Sloyd is perhaps the most promising.

In addition, there are a number of fantastic tools that might be termed 2.5-D, as their output is somewhere between 2- and 3-D. The idea with these is that the user uploads an image, and the AI then makes a good guess as to how the image would look in 3D.

The open-source animation and modeling platform Blender is, unsurprisingly, a leader in this space. And the CAD modeling software Rhino now has plugins such as SurfaceRelief and Ambrosinus Toolkit, which do a great job of generating depth maps from simple images.
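To make the 2.5-D idea concrete: once a depth map exists (a grayscale image in which brighter pixels mean "closer" or "higher"), turning it into geometry can be as simple as lifting each pixel into a vertex and stitching neighbors into triangles. The sketch below assumes the depth map has already been produced by some depth-estimation model and normalized to the range [0, 1]; the function name `depth_map_to_mesh` and its parameters are hypothetical, and this is not how SurfaceRelief, Ambrosinus Toolkit, or Blender actually implement it.

```python
from typing import List, Tuple

Vertex = Tuple[float, float, float]
Face = Tuple[int, int, int]


def depth_map_to_mesh(
    depth: List[List[float]], max_height: float = 1.0, pixel_size: float = 1.0
) -> Tuple[List[Vertex], List[Face]]:
    """Turn a depth map (values assumed to be in [0, 1]) into a relief surface.

    Each pixel becomes one vertex raised by depth * max_height; each 2x2
    block of neighboring pixels is split into two triangles.
    """
    rows = len(depth)
    cols = len(depth[0]) if rows else 0
    if rows < 2 or cols < 2:
        raise ValueError("depth map must be at least 2x2 pixels")

    vertices: List[Vertex] = []
    for r in range(rows):
        for c in range(cols):
            z = depth[r][c] * max_height
            vertices.append((c * pixel_size, r * pixel_size, z))

    faces: List[Face] = []
    for r in range(rows - 1):
        for c in range(cols - 1):
            i = r * cols + c  # index of the top-left vertex of this 2x2 block
            faces.append((i, i + 1, i + cols))
            faces.append((i + 1, i + cols + 1, i + cols))
    return vertices, faces
```

The output is only a height field (one surface, no back or sides), which is exactly why such results sit "between 2- and 3-D": nothing hidden behind the visible surface can be recovered from a single image.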

All of this, it should first be said, is exciting and cool and novel. As a CAD designer myself, I eagerly anticipate the potential benefits. And engineers, 3D printing hobbyists, and video game designers, among many others, likewise stand to benefit.

However, text-to-CAD also has downsides, many of them severe. A brief listing might include:

- Opening the door to the mass creation of weapons and of racist or otherwise objectionable material
- Unleashing a tidal wave of crummy models, which then go on to pollute model repos
- Abrogating the rights of content creators, whose work is copyrighted
- Amplifying very-online Western design at the expense of non-Western design traditions (i.e., digital colonialism)

In any event, text-to-CAD is coming whether we want it or not. But, thankfully, there are a number of steps technologists can take to improve their platforms’ output and reduce their negative impacts. I’ve identified four key areas where such programs can level up: dataset curation, parameterization, pattern language, and filtering. To my knowledge, these areas remain largely unexplored in the text-to-CAD context.

Dataset Curation

Passive Curation

While not all approaches to text-to-CAD rely on a training set of 3D models (Google’s DreamFusion is one exception), training on a curated dataset of models is still the most common approach. The key here, it scarcely bears mentioning, is to curate an awesome set of models for training.

And the key to doing that is twofold. First, technologists ought to avoid the obvious model sources: Thingiverse, Cults3D, MyMiniFactory. While high-quality models are present there (TOMO’s among them ;), the vast majority are junk. Second, super-high-quality model repos should be sought out (Scan the World is perhaps the world's best).

Next, model sources can be weighted according to quality. Design PhDs would likely jump at the chance to do this kind of labeling (and, due to the inequities of the labor market, for peanuts).
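One simple way such quality weights could feed into training is weighted sampling: each source gets a human-assigned weight, and training batches are drawn in proportion to it. The sketch below is one possible scheme, not an established recipe; the function `sample_training_batch`, the source names, and the choice to split a source's weight evenly across its models are all assumptions made for illustration.

```python
import random
from typing import Dict, List


def sample_training_batch(
    models_by_source: Dict[str, List[str]],
    source_weights: Dict[str, float],
    batch_size: int,
    seed: int = 0,
) -> List[str]:
    """Draw a batch of model IDs, favoring sources with higher quality weights.

    A source's weight is split evenly across its models, so a huge repo of
    mediocre models cannot swamp a small repo of excellent ones. Sources
    without a positive weight (including unlabeled ones) are excluded.
    """
    rng = random.Random(seed)
    model_ids: List[str] = []
    weights: List[float] = []
    for source, models in models_by_source.items():
        w = source_weights.get(source, 0.0)
        if w <= 0 or not models:
            continue
        for m in models:
            model_ids.append(m)
            weights.append(w / len(models))
    if not model_ids:
        raise ValueError("no source has a positive weight")
    # Sampling with replacement, proportional to each model's share of weight
    return rng.choices(model_ids, weights=weights, k=batch_size)
```

Splitting the weight per source means the labelers' judgment applies to a repository as a whole; per-model labels could replace the even split if the budget for labeling allows it.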

Active Curation

Curation can and should take a more active role. Many museums, private collections, and design firms would gladly have their industrial design collections 3D scanned. And in addition to producing a rich corpus, scanning would create a robust record of our all-too-fragile culture.

To wit: the French effort to rebuild Notre Dame after its catastrophic 2019 fire has relied heavily on 3D scans made years earlier by a lone American.

Parameterized Output

Text-to-CAD output would gain substantial value if it came parameterized. For example, if you were to ask for a 3D printable model of a teacup, you’d be offered sliders to control its diameter, thickness, handle shape, etc. Without parameters for fine-grained control, the results become take-it-or-leave-it. And many would-be customers will simply opt to ‘leave it’.
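As a rough illustration of what "parameterized output" could mean, here is a teacup body described by a handful of named dimensions rather than a fixed mesh. Each field plays the role of a slider, and the function returns a 2D cross-section that a CAD tool could revolve around its vertical axis. The class `TeacupParams`, the function `cup_profile`, and the default dimensions are hypothetical; the handle, which would need its own parameters, is left out to keep the sketch short.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class TeacupParams:
    # All dimensions in millimetres; each field is one "slider".
    diameter: float = 80.0        # outer diameter of the body
    height: float = 65.0
    wall_thickness: float = 3.0
    base_thickness: float = 5.0

    def validate(self) -> None:
        # Reject slider combinations that cannot produce a valid solid
        if self.wall_thickness * 2 >= self.diameter:
            raise ValueError("walls are too thick for this diameter")
        if self.base_thickness >= self.height:
            raise ValueError("base is too thick for this height")


def cup_profile(p: TeacupParams) -> List[Tuple[float, float]]:
    """Return the (radius, z) outline of half the cup's cross-section.

    The last point connects back to the first. Revolving this outline 360
    degrees about the z-axis yields the cup body; changing any parameter
    simply regenerates the outline.
    """
    p.validate()
    r_out = p.diameter / 2
    r_in = r_out - p.wall_thickness
    return [
        (0.0, 0.0),                 # center of the underside
        (r_out, 0.0),               # outer edge of the foot
        (r_out, p.height),          # outer rim
        (r_in, p.height),           # inner rim
        (r_in, p.base_thickness),   # where the inner wall meets the floor
        (0.0, p.base_thickness),    # center of the inner floor
    ]
```

Because the geometry is a function of the parameters, a user who finds the generated cup too small can drag `diameter` up instead of discarding the result, which is precisely the "take it or leave it" problem described above.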

To this end, it might be useful to train a Large CAD Model (LCM) on the old CAD models of industrial behemoths like Siemens, Bosch, and GE. If data privacy is a concern, such multinationals could form a consortium whose resulting model would be proprietary and available only for a large fee (from which they would profit). Better a walled garden than no flowers at all.

A Pattern Language for Usability

Pattern languages were pioneered in the 1970s by polymath Christopher Alexander. They are defined as an organized and mutually-reinforcing set of patterns, each of which describes a problem and the core of its solution. While Alexander’s pattern languages were targeted at architecture and urban planning, they have been profitably applied to computer programming, and stand to be at least as useful in the domain of generative design.

In the context of text-to-CAD, a pattern language might consist of a set of patterns, where each pattern is defined by a name, a description, an example image or sketch, a context where it applies, a problem statement, a solution statement, and some related patterns. The patterns could be organized hierarchically, from more abstract to more concrete. Embodied in these patterns would be best practices with respect to design fundamentals: human factors, functionality, aesthetic preferences, etc. Models generated under such patterns would thereby be more usable, more understandable (avoiding the black box problem common to machine learning models), and easier to fine-tune.
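The structure just described maps naturally onto a small data model. The sketch below encodes exactly the listed fields (name, description, example image, context, problem, solution, related patterns) plus a `parent` link for the abstract-to-concrete hierarchy. The class names `Pattern` and `PatternLanguage` are hypothetical, and how a generator would consume these patterns is left open.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Pattern:
    name: str
    description: str
    context: str                                 # where the pattern applies
    problem: str
    solution: str
    example_image: Optional[str] = None          # path to a sketch or render
    parent: Optional[str] = None                 # more abstract pattern, if any
    related: List[str] = field(default_factory=list)


class PatternLanguage:
    def __init__(self) -> None:
        self.patterns: Dict[str, Pattern] = {}

    def add(self, pattern: Pattern) -> None:
        # Parents must be added first and names are unique, so the
        # hierarchy can never contain a cycle.
        if pattern.name in self.patterns:
            raise ValueError(f"duplicate pattern: {pattern.name}")
        if pattern.parent is not None and pattern.parent not in self.patterns:
            raise KeyError(f"unknown parent pattern: {pattern.parent}")
        self.patterns[pattern.name] = pattern

    def children(self, name: str) -> List[Pattern]:
        return [p for p in self.patterns.values() if p.parent == name]

    def walk(self, name: str, depth: int = 0) -> List[str]:
        """List a pattern and its descendants, most abstract first, indented by level."""
        lines = ["  " * depth + name]
        for child in self.children(name):
            lines.extend(self.walk(child.name, depth + 1))
        return lines
```

For example, an abstract pattern such as "Hand-Held Vessel" (hypothetical) could have a concrete child "Handle Clearance" whose solution reads "leave at least a finger's width between handle and body"; a generator that records which patterns it applied can then explain its output in those terms.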

Thorough Filtering

As Irene Solaiman recently pointed out in a poignant interview, generative AI is sorely in need of thorough guardrails. A pattern language might produce high quality models, but there is nothing inherent in one to prevent generation of undesirable output. And this is where guardrails come in.

We need to be capable of detecting and denying prompts that request weapons, gore, child sexual abuse material (CSAM), and other objectionable content. Technologists wary of lawsuits might add copyrighted products to this list. And if current experience is any guide, such prompts are likely to constitute a significant portion of queries.
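The crudest possible form of prompt screening is a denylist of terms grouped by category. The sketch below shows that baseline only to make the idea concrete; the category names and terms are hypothetical and deliberately tiny, and a keyword list is easy to evade and prone to false positives, so production systems would more plausibly rely on trained classifiers.

```python
import re
from typing import Dict, List, Optional, Tuple

# Hypothetical example categories and terms; real coverage would be far broader.
BLOCKED_TERMS: Dict[str, List[str]] = {
    "weapons": ["gun", "firearm", "suppressor", "silencer"],
    "copyright": ["mickey mouse", "darth vader"],
}


def _compile(terms: List[str]) -> "re.Pattern[str]":
    # Word boundaries stop "gun" from matching inside "burgundy";
    # the optional "s" also catches simple plurals.
    alternatives = "|".join(re.escape(t) for t in terms)
    return re.compile(r"\b(?:" + alternatives + r")s?\b", re.IGNORECASE)


PATTERNS = {category: _compile(terms) for category, terms in BLOCKED_TERMS.items()}


def screen_prompt(prompt: str) -> Tuple[bool, Optional[str]]:
    """Return (allowed, blocked_category); the first matching category wins."""
    for category, pattern in PATTERNS.items():
        if pattern.search(prompt):
            return False, category
    return True, None
```

Even this toy version shows the trade-off: every term added to the list blocks some legitimate requests, which is one reason the author's call for thorough, well-designed guardrails is harder than it sounds.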

An even more difficult challenge is debiasing output in a culturally sensitive way: steering it away from models that reify the inequities implicit in most CAD model corpora.

In addition, we need widely-shared performance benchmarks, analogous to those that have cropped up around LLMs. After all, if you can’t measure it, you can’t improve it.
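A shared benchmark needs, at minimum, agreed-upon metrics. One metric commonly used in 3D generation research to compare a generated shape against a reference shape is the Chamfer distance between point clouds sampled from their surfaces. The sketch below implements one common variant (squared distances, averaged per cloud, then summed); other papers use unsquared distances or different normalization, so scores are only comparable when everyone uses the same definition, which is exactly the argument for shared benchmarks.

```python
from typing import Sequence, Tuple

Point = Tuple[float, float, float]


def chamfer_distance(a: Sequence[Point], b: Sequence[Point]) -> float:
    """Symmetric Chamfer distance between two point clouds.

    For each point, take the squared distance to its nearest neighbor in the
    other cloud; average within each cloud and sum the two averages.
    0 means the clouds coincide. Brute force: O(len(a) * len(b)).
    """
    if not a or not b:
        raise ValueError("point clouds must be non-empty")

    def sq_dist(p: Point, q: Point) -> float:
        return sum((pi - qi) ** 2 for pi, qi in zip(p, q))

    a_to_b = sum(min(sq_dist(p, q) for q in b) for p in a) / len(a)
    b_to_a = sum(min(sq_dist(q, p) for p in a) for q in b) / len(b)
    return a_to_b + b_to_a
```

Geometric agreement is only one axis, of course; a benchmark for text-to-CAD would presumably also need measures of printability, prompt fidelity, and usability that no single distance captures.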

____

In conclusion, the emergence of AI-powered text-to-CAD generation presents both risks and opportunities, the ratio of which is still very much undecided. The proliferation of low-quality CAD models and of toxic content are just two of the issues that require immediate attention.

There are several neglected areas where technologists might profitably train their attention. Dataset curation is crucial: we need to track down high-quality models from high-quality sources, and explore alternatives such as scanning industrial design collections. Parameterization of model output will add value by giving users the ability to fine-tune models until they meet the requirements of their use case. A pattern language for usability could provide a powerful framework for incorporating design best practices. Finally, thorough filtering techniques must be developed to prevent the generation of dangerous content.

I hope the ideas presented here will help technologists avoid the pitfalls that have plagued generative AI to date, and also enhance the ability of text-to-CAD to deliver delightful models that benefit the many people who will soon be turning to them.